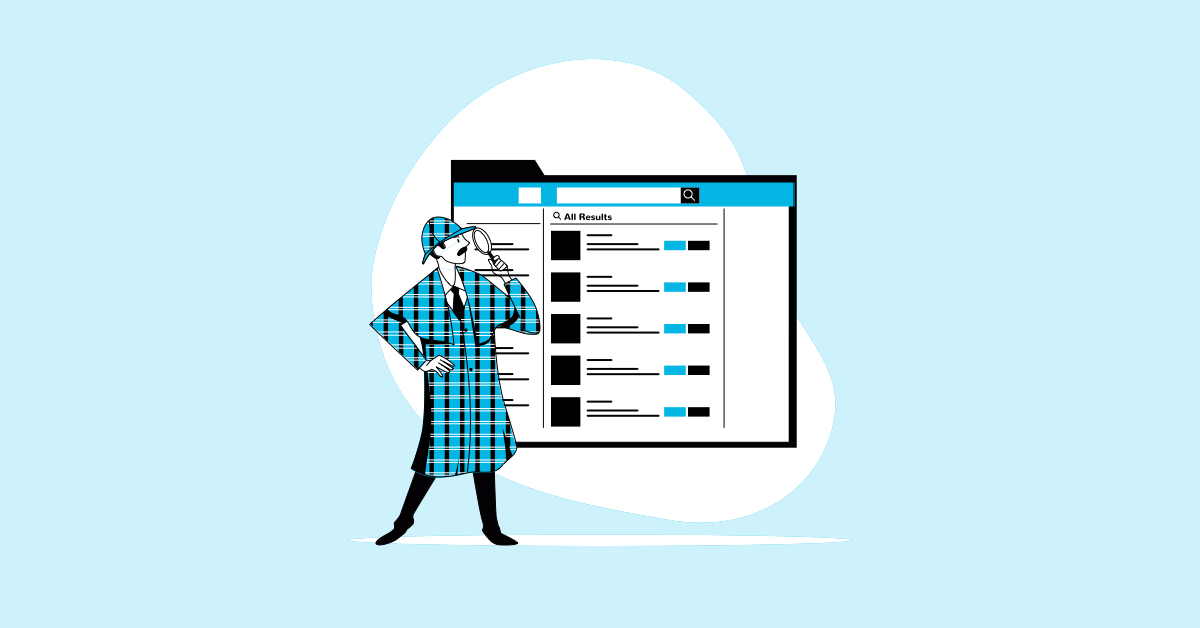

AI is clearly smart and developing at a mind-boggling pace. You might wonder: is AI like traditional software? Do you still need to test it?

The answers are no, and yes. AI apps aren’t like traditional software, and they are indeed harder to test, but they still need to be tested.

LLMs are probabilistic and their output is complex, so traditional assert-based tests seem very difficult to write. As a result, more and more software shops are giving up and relying on user reports. This is a precarious position, because users will often lose trust in the app rather than report errors.

Let’s start simple. What can you test?

First, isolate the deterministic parts and test those the traditional way. Second, approach the AI components at the level of the entire system.

An Example

Here’s a fairly common RAG (retrieval-augmented generation) app architecture. In fact, most RAG apps are some variant of this:

Download/Ingest → Chunk → Calculate Embedding → Store in DB → Query → LLM

This gives you an LLM app (e.g. a chatbot) that is able to answer questions based on a corpus of data. Maybe you have a pile of PDFs and you want to offer your customers a chatbot that answers questions from that library.
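To make the stages concrete, here is a minimal, dependency-free sketch of the pipeline up to the query step. It is a toy under loud assumptions: the "embedding" is a hashed bag-of-words vector standing in for a real embedding model, the "DB" is an in-memory list, the final LLM call is omitted, and all names (`chunk`, `embed`, `InMemoryStore`, `ingest`) are hypothetical.

```python
import math
import re
import zlib
from collections import Counter

DIM = 256  # size of the toy embedding; a real model would produce its own vectors


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Chunk: split text into pieces of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> list[float]:
    """Calculate embedding (toy): hashed bag of lowercase words, L2-normalized."""
    vec = [0.0] * DIM
    for word, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(word.encode()) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryStore:
    """Store in DB (toy): keeps (embedding, chunk, source) rows in a list."""

    def __init__(self) -> None:
        self.rows: list[tuple[list[float], str, str]] = []

    def add(self, text: str, source: str) -> None:
        self.rows.append((embed(text), text, source))

    def query(self, question: str, k: int = 3) -> list[tuple[str, str]]:
        """Query: return the k (chunk, source) pairs most similar to the question."""
        q = embed(question)
        # Non-empty vectors are unit length, so the dot product is cosine similarity.
        ranked = sorted(
            self.rows,
            key=lambda row: sum(a * b for a, b in zip(q, row[0])),
            reverse=True,
        )
        return [(text, source) for _, text, source in ranked[:k]]


def ingest(store: InMemoryStore, docs: dict[str, str]) -> None:
    """Download/Ingest: chunk each document and store every chunk with its source."""
    for source, text in docs.items():
        for piece in chunk(text):
            store.add(piece, source)
```

The chunks returned by `query` would then be placed into the LLM prompt, which is the last stage of the pipeline.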

How do we tackle testing this thing?

First, isolate the units. Most of these steps (downloading, chunking, storing, and querying) are easy to unit test. Write tests for them just like any other component.
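For instance, a chunker is ordinary deterministic code, so plain assertions can pin it down. This sketch assumes a hypothetical `chunk_words` function that produces fixed-size word windows with overlap; the test checks properties that should hold for any input (size limit, overlap, no lost words) rather than one exact output.

```python
def chunk_words(text: str, size: int, overlap: int) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reached the end
            break
    return chunks


def test_chunk_words() -> None:
    text = " ".join(f"w{i}" for i in range(10))
    chunks = chunk_words(text, size=4, overlap=1)
    # No chunk exceeds the size limit.
    assert all(len(c.split()) <= 4 for c in chunks)
    # Consecutive chunks share exactly the boundary word.
    for a, b in zip(chunks, chunks[1:]):
        assert a.split()[-1] == b.split()[0]
    # Every word of the input survives chunking.
    assert set(text.split()) == {w for c in chunks for w in c.split()}
    # Empty input yields no chunks.
    assert chunk_words("", size=4, overlap=1) == []
```

Run it with pytest like any other unit test.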

Second, unit tests cover too small an area to verify that the system works as a whole. That needs a different approach.

In this example, chunking is the most error-prone part. If you don’t chunk at all, each document is reduced to a single embedding that blurs all of its topics together, so documents look alike to a query and the right content doesn’t surface. On the other hand, if your chunks are too small, they lose their context and rarely capture the actual intent of the document. The art of chunking is finding a happy middle ground.
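One common way to aim for that middle ground is to respect natural boundaries: pack whole paragraphs into a chunk until a size budget is reached, so each chunk stays specific but still carries a complete thought. This is a minimal sketch of one strategy among many; `pack_paragraphs` and its `max_words` budget are illustrative assumptions, not a recommended setting.

```python
def pack_paragraphs(text: str, max_words: int = 200) -> list[str]:
    """Greedily group consecutive paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words becomes its own (oversized) chunk
    instead of being cut mid-thought.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0
    for para in paragraphs:
        n = len(para.split())
        # Close the current chunk if adding this paragraph would exceed the budget.
        if current and current_len + n > max_words:
            chunks.append("\n\n".join(current))
            current, current_len = [], 0
        current.append(para)
        current_len += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Tuning `max_words` trades specificity against context, which is exactly why its effect has to be judged at the system level.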

While it’s easy to write unit tests for chunking, you can’t confirm that it works correctly without running it through the full pipeline. Some of the most effective fixes for a chunking problem, such as re-ranking models and late interaction approaches, are applied in the query step, so much of the testing needs to happen at the system level.
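A simple system-level check is a small labeled set of questions, each paired with the source document that should answer it, scored by how often that source appears in the top-k results (a hit rate, or recall@k). The sketch assumes a hypothetical `retrieve(question, k)` callable returning `(chunk_text, source)` pairs, so whatever chunker, re-ranker, or retrieval approach sits behind it, the same test compares variants end to end.

```python
from typing import Callable

# Hypothetical interface: retrieve(question, k) -> list of (chunk_text, source_id)
Retriever = Callable[[str, int], list[tuple[str, str]]]


def hit_rate_at_k(retrieve: Retriever, labeled: list[tuple[str, str]], k: int = 5) -> float:
    """Fraction of (question, expected_source) pairs whose source appears in the top-k results."""
    if not labeled:
        raise ValueError("labeled set is empty")
    hits = 0
    for question, expected_source in labeled:
        sources = {source for _, source in retrieve(question, k)}
        if expected_source in sources:
            hits += 1
    return hits / len(labeled)
```

In CI you might assert that the rate stays above a threshold you pick for your corpus, and compare it before and after changing the chunker.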

Testing AI Components

Unit testing is a three-part process:

- Set up a specific scenario

- Execute the code

- Verify the result

When you remove step 1, it looks more like monitoring. It’s tempting to view monitoring as something for an operations or DevOps team, but with complex systems like AI, monitoring is a critical tool in the quality assurance toolbox.
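In code, the difference is that a monitoring check runs on whatever real input arrives and records its verdict instead of failing a build. A minimal sketch; `monitored`, the check functions, and the `record` callback (which might write a log line or bump a metrics counter) are hypothetical names.

```python
from typing import Callable

Check = Callable[[str, str], bool]      # (question, answer) -> did the check pass?
Recorder = Callable[[str, bool], None]  # (check_name, passed) -> store it somewhere


def monitored(answer_fn: Callable[[str], str], checks: dict[str, Check],
              record: Recorder) -> Callable[[str], str]:
    """Wrap answer_fn so that every real request is also verified by `checks`.

    There is no scenario setup: "execute" and "verify" run on live traffic,
    and each verdict is recorded rather than raised as a test failure.
    """
    def wrapper(question: str) -> str:
        answer = answer_fn(question)
        for name, check in checks.items():
            try:
                passed = bool(check(question, answer))
            except Exception:
                passed = False  # a crashing check is itself worth investigating
            record(name, passed)
        return answer
    return wrapper
```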

For our example RAG pipeline, we may want to assert that:

- Every doc returned in query results is at least tangentially relevant to the question

- The LLM’s response correctly references the query results

- The LLM’s full response is consistent with the query results

All of these can be checked against the query results themselves, so they have a ground truth without setting up a specific test scenario. The catch is that these assertions seem tricky to implement. With LLMs, however, they’re not as tricky as they might seem.
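Not every check needs an LLM, though. Part of the second assertion becomes deterministic if the prompt asks the LLM to cite its sources. Assuming a hypothetical convention where retrieved chunks are numbered [1], [2], and so on in the prompt and the answer cites them with the same bracket syntax, a cheap check confirms that the answer cites something and that every citation points at a chunk that was actually retrieved. Whether the cited chunk really supports the claim still needs a semantic, LLM-based check. `citation_errors` is an illustrative name.

```python
import re

# Matches [n]-style citations, e.g. "[2]".
CITATION = re.compile(r"\[(\d+)\]")


def citation_errors(answer: str, num_chunks: int) -> list[str]:
    """List citation problems, given num_chunks retrieved chunks numbered from 1."""
    cited = [int(n) for n in CITATION.findall(answer)]
    errors = []
    if not cited:
        errors.append("answer cites no sources")
    for n in sorted(set(cited)):
        if not 1 <= n <= num_chunks:
            errors.append(f"citation [{n}] does not match any retrieved chunk")
    return errors
```

An empty list means the citations are well-formed.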

The Python library DSPy is very useful for these monitoring-style tests. It’s an LLM framework that lets you state a problem plainly, and it can automatically generate an LLM prompt optimized for answering it.

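```python
import dspy

# Assumes DSPy 2.5+ and an OpenAI API key in the environment; any model DSPy supports works.
dspy.configure(lm=dspy.LM("openai/gpt-4o-mini"))


class IsChunkRelevantSignature(dspy.Signature):
    """Decide whether the document chunk is relevant to the question."""

    document_chunk = dspy.InputField()
    question = dspy.InputField()
    is_relevant = dspy.OutputField(desc="yes or no")


_is_chunk_relevant = dspy.Predict(IsChunkRelevantSignature)


def is_chunk_relevant(chunk: str, question: str) -> bool:
    result = _is_chunk_relevant(document_chunk=chunk, question=question)
    # Normalize, since the model may answer "Yes", "yes." or similar.
    return result.is_relevant.strip().lower().startswith("yes")
```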
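Because a judge like `is_chunk_relevant` has a plain `(chunk, question) -> bool` shape, it can be applied to every document a query returns, which is the first assertion in the list above. A minimal sketch, assuming the judge is passed in as a function (so tests can substitute a fake) and that you want a per-request summary rather than a bare pass/fail; `relevance_report` is a hypothetical name.

```python
from typing import Callable, Optional


def relevance_report(question: str, chunks: list[str],
                     judge: Callable[[str, str], bool]) -> dict:
    """Apply judge(chunk, question) to every retrieved chunk and summarize."""
    verdicts = [bool(judge(chunk, question)) for chunk in chunks]
    irrelevant = [i for i, ok in enumerate(verdicts) if not ok]
    relevant_fraction: Optional[float] = sum(verdicts) / len(verdicts) if verdicts else None
    return {
        "num_chunks": len(chunks),
        "irrelevant_indexes": irrelevant,
        "relevant_fraction": relevant_fraction,
        # "Every doc is relevant" holds vacuously when nothing was retrieved.
        "passed": not irrelevant,
    }
```

In production the call would be `relevance_report(question, chunks, judge=is_chunk_relevant)`.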

Testing The Tests

This is amazing, right? Never before have we been able to test such opaque functionality. On the other hand, these tests are implemented with an LLM, and LLMs are known to be wrong from time to time. How do we make sure these new tests actually work?

First, write regular old unit tests for them. Check that they work on a variety of specific test cases. Do this in the dev workflow, both in isolation and end to end. If they don’t work well enough, DSPy offers several ways to improve them, from more capable modules like ChainOfThought to its optimizers.
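A sketch of those unit tests: a small hand-labeled set of (chunk, question, expected verdict) cases, scored for accuracy. The cases below are placeholders to replace with examples from your own corpus. Because the judge is an LLM, comparing an accuracy figure against a bar tends to be more practical than requiring every case to pass.

```python
from typing import Callable

# Hand-labeled (chunk, question, expected_is_relevant) cases. Placeholders: use
# real chunks and questions from your own corpus, including tricky near-misses.
CASES = [
    ("Gamma rays are high-energy photons emitted by atomic nuclei.", "What is a gamma ray?", True),
    ("Preheat the oven to 220 C before baking the bread.", "What is a gamma ray?", False),
    ("Lead and concrete are common shielding against gamma radiation.", "How do you shield gamma rays?", True),
]


def judge_accuracy(judge: Callable[[str, str], bool],
                   cases: list[tuple[str, str, bool]] = CASES) -> tuple[float, list[int]]:
    """Return (accuracy, indexes of the cases the judge got wrong)."""
    if not cases:
        raise ValueError("no labeled cases")
    wrong = [i for i, (chunk, question, expected) in enumerate(cases)
             if bool(judge(chunk, question)) != expected]
    return 1 - len(wrong) / len(cases), wrong
```

In a pytest suite this might read `accuracy, wrong = judge_accuracy(is_chunk_relevant)` followed by `assert accuracy >= 0.9, wrong`, where 0.9 is an arbitrary bar to tune for your use case.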

Next, run them in production and test every request. Use a fast model like gpt-4o-mini or gemini-1.5-flash (at the time of writing, the latter was one of the fastest and cheapest models available) for these checks. They are easy questions for an LLM, so they don’t need the large bank of knowledge baked into a much bigger model.
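Checking every request adds LLM calls, so one option (an assumption about your architecture, not a requirement) is to run the checks in the background so the user’s response isn’t delayed. A sketch using the standard library’s `concurrent.futures.ThreadPoolExecutor`; `check_in_background` and `record` are hypothetical names.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable

# A small pool dedicated to quality checks, separate from request handling.
_check_pool = ThreadPoolExecutor(max_workers=4, thread_name_prefix="quality-check")


def check_in_background(name: str, check: Callable[[], bool],
                        record: Callable[[str, bool], None]) -> Future:
    """Schedule a check without blocking the caller; record the verdict when it finishes."""
    def run() -> bool:
        try:
            passed = bool(check())
        except Exception:
            passed = False  # a crashing check counts as a failure to investigate
        record(name, passed)
        return passed
    return _check_pool.submit(run)
```

A request handler would call something like `check_in_background("chunks_relevant", lambda: all(is_chunk_relevant(c, question) for c in chunks), record)` right after producing the answer and return immediately, where `chunks` are that request’s retrieved chunks and `record` is your metrics sink.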

Finally, no matter how good these tests get, they’ll still be flaky to some extent, which is why it’s critical to review their results in aggregate over all traffic going through the system. You can view the results as:

- time series — e.g. “percentage of failures per minute”

- post-hoc cohort analysis — e.g. “percentage of failures for questions about the Gamma particle”

It’s wise to monitor and analyze from both perspectives.
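Both views can come from the same log of check results. A minimal sketch, assuming each result is stored with a timestamp, a topic label, and a pass/fail flag; how topics get assigned (hand-written rules, another LLM call, and so on) is up to you, and `CheckResult`, `failure_rate_by`, `per_minute`, and `per_topic` are hypothetical names.

```python
from collections import defaultdict
from datetime import datetime
from typing import Callable, Hashable, Iterable, NamedTuple


class CheckResult(NamedTuple):
    timestamp: datetime
    topic: str
    passed: bool


def failure_rate_by(results: Iterable[CheckResult],
                    key: Callable[[CheckResult], Hashable]) -> dict:
    """Group results by key(result) and return {group: fraction of failed checks}."""
    totals: dict = defaultdict(int)
    failures: dict = defaultdict(int)
    for r in results:
        k = key(r)
        totals[k] += 1
        if not r.passed:
            failures[k] += 1
    return {k: failures[k] / totals[k] for k in totals}


def per_minute(r: CheckResult) -> datetime:
    """Time-series bucket: truncate the timestamp to the minute."""
    return r.timestamp.replace(second=0, microsecond=0)


def per_topic(r: CheckResult) -> str:
    """Cohort bucket: the topic label attached to the request."""
    return r.topic
```

`failure_rate_by(results, per_minute)` gives the time series, and `failure_rate_by(results, per_topic)` gives the cohort view.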

Conclusion

Regardless of how you approach it, testing AI apps matters more than ever. It feels difficult, but with a solid toolbox, it is attainable. Good luck building!

Frequently Asked Questions

Why is testing AI applications different from traditional software testing? Testing AI apps differs because AI's probabilistic nature and complex outputs make traditional assert-based tests difficult. We need to shift our focus from expecting one specific answer to evaluating ranges of acceptable outcomes. Plus, factors like data bias and the "black box" nature of AI decision-making introduce new testing challenges.

How can I test the non-deterministic parts of my AI application? While traditional unit tests work well for deterministic components, AI components often require system-level testing. Look at the entire pipeline and how the components interact. For example, in a RAG pipeline, chunking errors might only become apparent during the query phase, highlighting the need for end-to-end testing. Consider using LLM-based testing frameworks like DSPy to create tests that evaluate the system's overall behavior and responses.

How can I ensure my LLM-based tests are accurate and reliable? Even tests built with LLMs can be flawed. Start by thoroughly unit testing your LLM-based tests with various specific scenarios during development. Then, deploy them to production and test every request using a fast, cost-effective LLM. Finally, since some flakiness is inevitable, analyze the test results in aggregate, looking at time series data and cohort analysis to identify trends and potential issues.

What are some key techniques for effective AI application testing? Focus on several key areas: input data testing to ensure data integrity, real-world simulations to test unexpected scenarios, model validation to measure performance on unseen data, testing for automation bias to encourage critical thinking from users, and addressing ethical considerations like bias and fairness.

What if I need help implementing robust AI testing for my applications? Consider partnering with a specialized AI testing service provider like MuukTest. We can help you develop and implement a comprehensive testing strategy tailored to your specific needs, ensuring complete test coverage and improved software quality. We can get you up and running quickly and efficiently.
