The only thing more painful than watching flaky automated tests fail is watching teams struggle to write those flaky tests in the first place. Let’s face it: test automation is hard to develop. It’s essentially a distributed system built on top of half-baked features that cause race conditions with every interaction, and test data is its own nightmare. It’s no wonder that so many automated test cases end up riddled with problems. Even seasoned developers often struggle to write them well.

For a team that historically has done only manual testing, the prospect of moving towards automated testing is daunting. Automation is software development. It requires new skills and new perspectives. There are an overwhelming number of tools to consider. And if the team doesn’t build the project “right,” then their tests will crash like a train that jumped its tracks. On top of everything, the team may carry a quiet fear of losing their jobs, due to being replaced by the automation itself or being fired for failing to gain automation development skills.

Transitioning from manual to automated testing will always be painful, but with the right strategy, the team can achieve success with automation within acceptable pain tolerances. I’ve helped many teams make this transition. I’ve seen it go well, and I’ve seen it go poorly. Here’s my five-step playbook for a successful test automation transformation with as little pain as possible.

#1 Pick the Right Goals

The most important step in any test automation initiative is picking the right goals. Unfortunately, I’ve seen too many teams pick misleading goals that focus on aspects of implementation. They set goals for test numbers, such as automating 1000 tests in 6 months or automating 100% of all new tests. If there is already a large repository of manual test cases, they may seek to convert, say, 75% of those manual tests to automation. They may even create scorecards for different teams to deliver new automated tests every sprint.

Rather than setting goals focused on implementation, teams should set goals focused on business value. Teams should ask themselves how automation will change the way they develop software. For example, let’s say a team spends 5 days running a suite of 200 regression tests manually once every two months before a release. If the team automated that suite and ran it every night, they would catch issues much sooner, and the testers would have more time for exploratory testing before releases. Automation would be a testing force multiplier. Goals should define specific business outcomes that test automation will accomplish.

#2 Pick the Right Resources

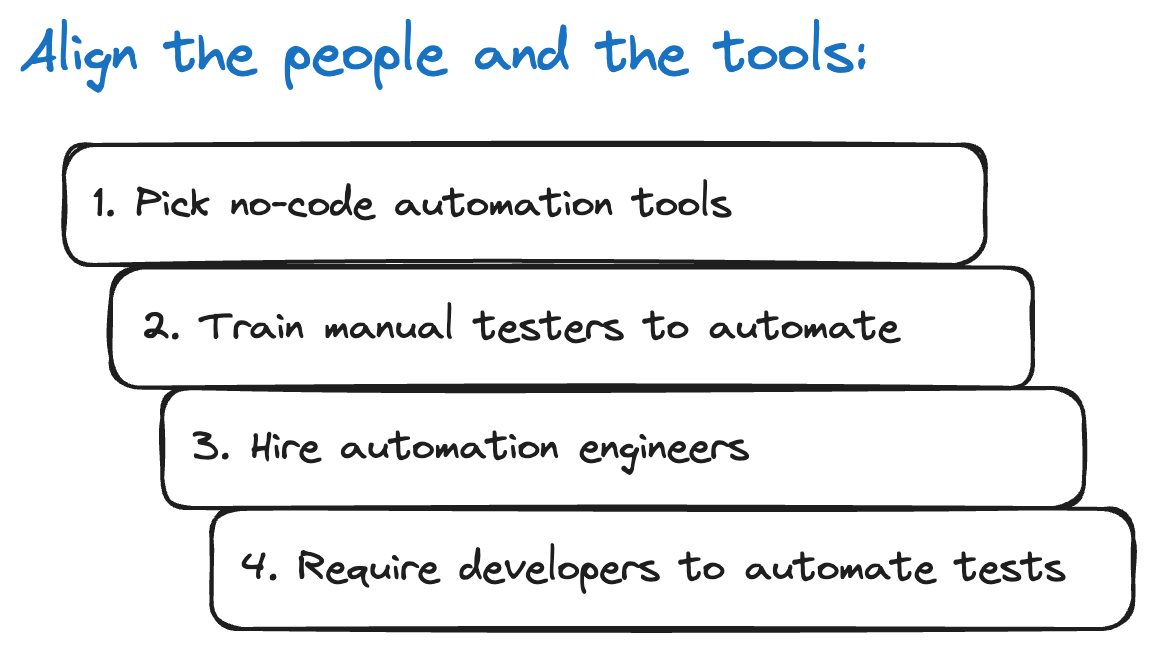

Once a team establishes their goals, the next step is to pick the right resources for achieving those goals. When I say “resources,” I mean both people and tools – they are tied together. There are four main paths for choosing resources.

The first path is picking no-code automation tools. A team’s manual testers should already know the products under test. They just need help translating their test cases into automated scripts. No-code tools provide features like screen recorders, plain-language step builders, and self-healing locators that let testers automate tests without knowing a programming language. They can be a great way for manual testers to go from zero to automation without becoming programmers. However, most no-code tools on the market are not free, and licenses can be quite costly. I’ve also found that all no-code tools have hard limits: it can be difficult to perform certain operations or to scale up test execution. They’re good for getting started, but they may not be sustainable in the long term.

The second path is training manual testers to become “automationeers” (short for “test automation engineers”). Manual testers on this path face two major challenges: first they must learn programming, and then they must learn automation. Neither is trivial. It can take months, if not years, to become proficient. Typically, I see this path work best for individuals but not for large groups. If leaders can identify manual testers who both want to pursue automationeering and show an aptitude for it, then they should support those testers as they upskill. However, if leaders try to push all manual testers through an automation transition, it probably won’t go well. Not every tester wants to pursue automation. Not every tester will make a good automationeer. And exploratory testing remains a necessary and important skill in its own right. “Big bang” transitions usually result in angry people, slow progress, and poor-quality automation code.

The third path is hiring automationeers. The business may decide that the time, cost, or risk of training existing team members to develop automation is too high. Hiring test automation experts can expedite progress towards the goals, and the automation they develop will (hopefully) be done right. However, hiring may not be possible: approval for job requisitions may be difficult to obtain, and finding the right automationeer can be arduous.

The fourth path is requiring developers to automate tests. Developers already have deep programming skills, and they could probably learn testing techniques and frameworks relatively quickly. There is no reason why they would not be able to automate tests for the features they are developing. The tradeoff is that developers would spend less time writing product code if they spend more time writing test code. The team’s velocity will slow down, at least until the benefits of automation start helping the team move faster. Unfortunately, many developers hold a distaste for testing work, and they might not be as keen to identify appropriate cases for testing as someone whose role is dedicated to testing.

Each path has advantages and disadvantages. I don’t universally advocate for one over the others. Weigh the factors, and decide what’s best for the situation.

#3 Pick the Right Tests

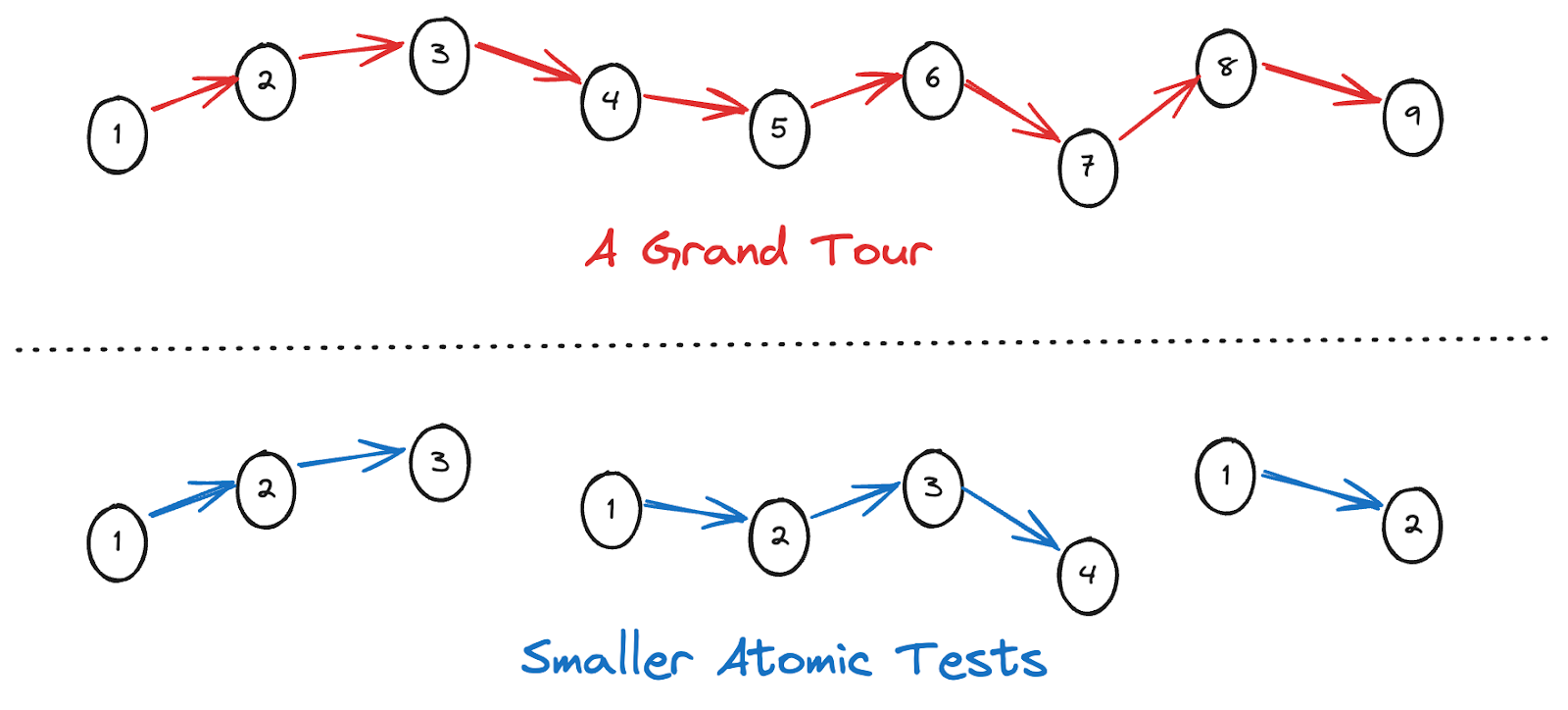

Not all test cases are suitable for automation. Manual testing favors long test cases that cover multiple behaviors. Testers take a “grand tour” through the system under test, exercising different features along their tour so they can maximize coverage in minimal time. I’ve seen manual test cases written with over a hundred steps each.

Unfortunately, those kinds of tests are not suitable for automation because they cover multiple behaviors. If a test fails, which behavior has the issue? Testers need to dig deeper to find out. The test also loses coverage, because any steps after the failure never run. Furthermore, each step is a potential point of failure, so a test with more steps has a higher risk of crashing.
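To make the step-count risk concrete, here is a small Python sketch. It assumes, purely for illustration, that every step fails independently with the same probability `p_step`; real step failures are rarely independent, so treat the numbers as rough intuition rather than a prediction.

```python
def chance_of_failure(steps: int, p_step: float) -> float:
    """Probability that at least one of `steps` steps fails.

    Simplifying assumption (illustration only): each step fails
    independently with the same probability `p_step`.
    """
    if steps < 0 or not 0.0 <= p_step <= 1.0:
        raise ValueError("steps must be >= 0 and p_step must be in [0, 1]")
    # The test passes only if every step passes: (1 - p)^n.
    return 1.0 - (1.0 - p_step) ** steps


# Hypothetical per-step failure rate of 0.5%.
for steps in (5, 20, 100):
    print(f"{steps:>3} steps: {chance_of_failure(steps, 0.005):.1%} chance of failing")
```

Under that hypothetical 0.5% rate, a 5-step test fails about 2.5% of the time, a 20-step test about 9.5%, and a 100-step grand tour about 39.4%.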

Automated tests should be short, atomic, and independent. Each test should cover exactly one main behavior. If a team is starting with a repository of tests that look like grand tours, then they should break down those tests before automating them.
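As a rough illustration of short, atomic, independent tests, here is a Python `unittest` sketch. The `ShoppingCart` class is a hypothetical stand-in for a product feature; in a real project, the tests would drive the actual product through its UI or API. Instead of one long tour that adds, removes, and validates in sequence, each test checks exactly one behavior and builds its own fresh state in `setUp`.

```python
import unittest


class ShoppingCart:
    """Hypothetical stand-in for a product feature under test."""

    def __init__(self) -> None:
        self.items: dict[str, int] = {}

    def add(self, sku: str, qty: int = 1) -> None:
        if qty <= 0:
            raise ValueError("quantity must be positive")
        self.items[sku] = self.items.get(sku, 0) + qty

    def remove(self, sku: str) -> None:
        self.items.pop(sku, None)

    def count(self) -> int:
        return sum(self.items.values())


class CartTests(unittest.TestCase):
    # setUp runs before every test, so each test gets a fresh cart and
    # no test depends on another test's leftovers or execution order.
    def setUp(self) -> None:
        self.cart = ShoppingCart()

    def test_add_item_increases_count(self) -> None:
        self.cart.add("apple", 2)
        self.assertEqual(self.cart.count(), 2)

    def test_remove_item_empties_cart(self) -> None:
        self.cart.add("apple")
        self.cart.remove("apple")
        self.assertEqual(self.cart.count(), 0)

    def test_rejects_non_positive_quantity(self) -> None:
        with self.assertRaises(ValueError):
            self.cart.add("apple", 0)
```

Running the file with `python -m unittest` executes all three tests. If `test_remove_item_empties_cart` fails, its name points straight at the broken behavior, and the other two tests still run.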

#4 Pick the Right Increments

With testing tools these days, teams can get automated tests up and running faster than ever. I know I’m not that old, but earlier in my career, we had to DIY much of our test automation projects. Nevertheless, successful projects still take time to build, and the total value test automation offers comes in increments. Remember, automation requires a development effort, regardless of the chosen tools.

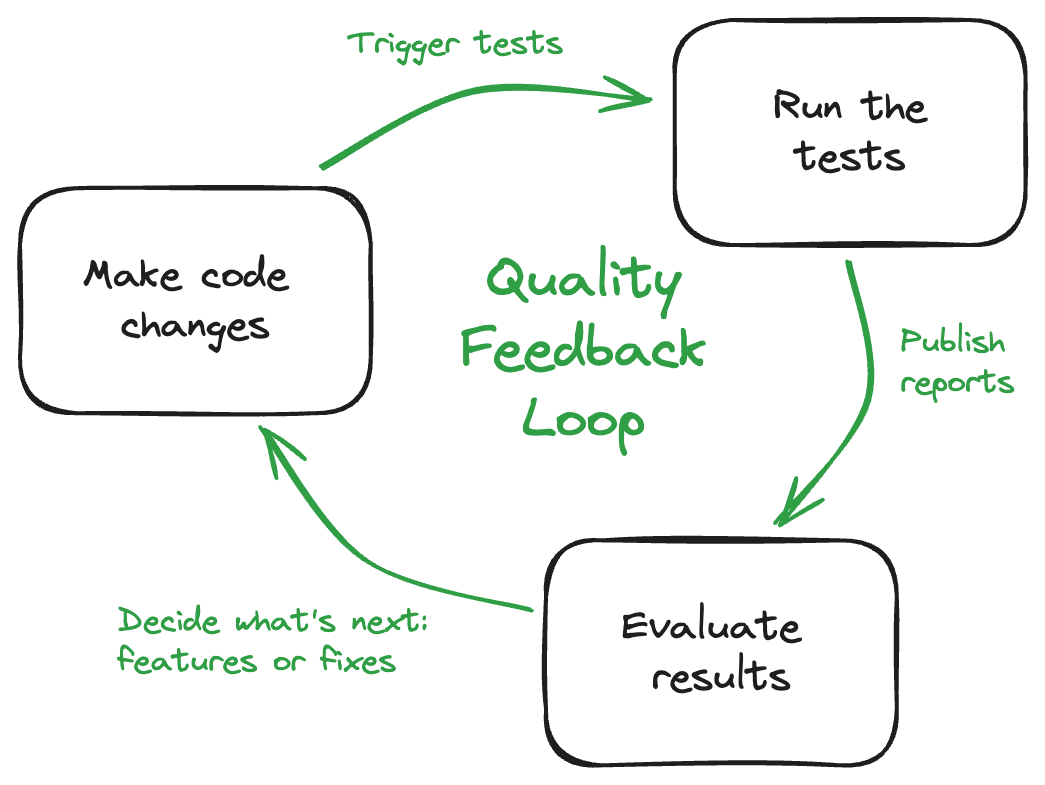

The most important aspect to build in the first iteration is the full feedback loop. Even before writing the first test, set up the systems for running tests automatically and then reporting results back to the team. Typically, this involves some sort of Continuous Integration system or build pipeline. Teams may choose to run tests continuously (after every code change) or periodically (every few hours or nightly). The feedback loop guarantees that tests will actually run and results will actually be reported.

Then, future iterations should prioritize the next sets of tests to automate. These may come from a backlog of existing manual tests to burn down or from a sprint plan. Pick tests that cover the most valuable behaviors. Iteration by iteration, the team will build up test coverage. Since the feedback loop will already be in place, new tests should automatically be included in regular runs.
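One way to make "most valuable" explicit is to score the backlog. The sketch below borrows its criteria from the FAQ at the end of this article (frequently executed regression tests, critical functionality, error-prone steps), but the `Candidate` fields and the weights in `priority` are hypothetical; a team should tune them to its own judgment.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A manual test case waiting in the automation backlog (hypothetical fields)."""
    name: str
    runs_per_release: int  # how often testers execute it manually
    critical: bool         # covers a business-critical behavior?
    error_prone: bool      # easy for a human to get wrong?


def priority(c: Candidate) -> int:
    # Hypothetical scoring: run frequency plus fixed bonuses for
    # criticality and human-error risk.
    return c.runs_per_release + (10 if c.critical else 0) + (5 if c.error_prone else 0)


def next_iteration(backlog: list[Candidate], capacity: int) -> list[Candidate]:
    """Pick the `capacity` highest-priority candidates for the next iteration."""
    return sorted(backlog, key=priority, reverse=True)[:capacity]


backlog = [
    Candidate("login with valid password", 4, True, False),
    Candidate("export report as CSV", 1, False, True),
    Candidate("checkout with saved card", 4, True, True),
    Candidate("change profile avatar", 1, False, False),
]
for c in next_iteration(backlog, capacity=2):
    print(c.name)
```

With these made-up entries, the checkout and login tests would be automated first, and the avatar test would wait.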

#5 Pick the Right Metrics

As a team perseveres in their transition towards automated testing, their automation project will grow and grow. Leadership should track the progress the team is making and the impact automation is having. Picking the right metrics is important. The right metrics ensure that automation is making a difference. The wrong metrics drive a team into poor practices. Here are three values to track for test automation, together with the right and wrong metrics for measuring them.

First, make sure that growth in automation means gains in test coverage. Many times, I’ve seen teams track the number of tests they automate each sprint. Test counts are a terrible metric because they don’t indicate coverage. Teams incentivized to push high test counts will write lots of small tests as fast as they can, possibly cutting corners or covering behaviors that are not important. Instead, teams should track feature coverage, and specifically changes in coverage. As automation grows, automated suites should cover an increasing percentage of the features in the product under test. Feature coverage is difficult to measure. Two reasonable approaches are linking tests to user stories and instrumenting product builds to trace coverage during test runs.
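The first approach, linking tests to user stories, can be sketched in a few lines of Python. The story IDs and the mapping from test names to stories are hypothetical; in practice they might come from test tags or a test management tool.

```python
def feature_coverage(features: set[str], test_links: dict[str, set[str]]) -> float:
    """Fraction of features linked to at least one automated test.

    `test_links` maps a test name to the user-story IDs it exercises
    (a hypothetical tagging scheme).
    """
    if not features:
        return 0.0
    covered = set().union(*test_links.values()) & features
    return len(covered) / len(features)


features = {"STORY-1", "STORY-2", "STORY-3", "STORY-4"}
last_sprint = {"test_login": {"STORY-1"}, "test_logout": {"STORY-1"}}
this_sprint = {**last_sprint, "test_search": {"STORY-2", "STORY-3"}}

before = feature_coverage(features, last_sprint)
after = feature_coverage(features, this_sprint)
print(f"coverage {before:.0%} -> {after:.0%} ({after - before:+.0%})")
```

Note that `test_logout` raised the test count without raising coverage, while the single `test_search` moved coverage from 25% to 75%. Tracking the change in coverage rewards the second kind of work.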

Second, make sure that automation is catching real issues. Teams love to track bug counts, but like test counts, that’s not a great metric. Legitimate bugs are present in the product, whether or not tests catch them. Incentivizing a team to increase bug count will encourage folks to report bugs that may not matter just to hit quotas. Instead, for automated tests, teams should track the reasons for test failures. Automated tests may fail for four main reasons: product bugs, automation bugs, outdated tests, and environment issues. Failures and bugs will inevitably happen, so teams should strive to minimize failures caused by reasons other than product bugs.
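Here is a minimal Python sketch of that tracking, assuming someone triages each failure into one of the four reasons above. The `FailureReason` enum and the sample week of triage results are hypothetical illustrations, not output from a real tool.

```python
from collections import Counter
from enum import Enum


class FailureReason(Enum):
    # The four main failure reasons named above.
    PRODUCT_BUG = "product bug"
    AUTOMATION_BUG = "automation bug"
    OUTDATED_TEST = "outdated test"
    ENVIRONMENT = "environment issue"


def noise_ratio(triaged: list[FailureReason]) -> float:
    """Share of failures NOT caused by product bugs (lower is better)."""
    if not triaged:
        return 0.0
    noise = sum(1 for r in triaged if r is not FailureReason.PRODUCT_BUG)
    return noise / len(triaged)


# Hypothetical triage results from one week of nightly runs.
week = [
    FailureReason.PRODUCT_BUG,
    FailureReason.ENVIRONMENT,
    FailureReason.ENVIRONMENT,
    FailureReason.OUTDATED_TEST,
    FailureReason.PRODUCT_BUG,
]
for reason, n in Counter(week).most_common():
    print(f"{reason.value}: {n}")
print(f"non-product-bug failures: {noise_ratio(week):.0%}")
```

In this made-up week, 3 of 5 failures were noise, and the breakdown shows that environment issues are the first thing to fix.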

Third, make sure that automation helps the team move faster. Speed is one of automation’s major value propositions. With automated tests, teams should be able to run tests more quickly and more frequently, providing feedback to guide the next iteration of development. Metrics should focus on minimizing the time to get feedback from testing. Some teams try to track the amount of time it takes to automate a test or to run a test – and those are reasonably good things to check – but ultimately, what matters is how much time it takes to get test results and bug reports from the time a code change is committed. Automated tests should be able to reveal basic functional problems much faster than manual testing cycles can. They should also free up the team’s time to work on other things.
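Time-to-feedback is simple arithmetic once the timestamps are recorded. This Python sketch assumes the team can pair each commit time with the time its test results reached the team; the timestamps below are hypothetical, modeling daytime commits checked by a nightly run that reports at 06:00.

```python
from datetime import datetime, timedelta
from statistics import median


def time_to_feedback(commit_at: datetime, results_at: datetime) -> timedelta:
    """Elapsed time from a code commit until test results reach the team."""
    if results_at < commit_at:
        raise ValueError("results cannot arrive before the commit")
    return results_at - commit_at


def median_feedback_hours(pairs: list[tuple[datetime, datetime]]) -> float:
    """Median time-to-feedback, in hours, across several commits."""
    hours = [time_to_feedback(c, r).total_seconds() / 3600 for c, r in pairs]
    return median(hours)


# Hypothetical (commit time, results time) pairs.
runs = [
    (datetime(2024, 5, 6, 10, 0), datetime(2024, 5, 7, 6, 0)),
    (datetime(2024, 5, 6, 15, 30), datetime(2024, 5, 7, 6, 0)),
    (datetime(2024, 5, 7, 9, 0), datetime(2024, 5, 8, 6, 0)),
]
print(f"median time-to-feedback: {median_feedback_hours(runs):.1f} h")
```

The median is used rather than the mean so that one unusually slow run does not dominate the trend. Moving from nightly runs to per-commit runs would show up directly as a drop in this number.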

The table below summarizes these metrics. This list is not exhaustive, but it’s a good start.

| Value to measure | Wrong metrics | Better metrics |
| --- | --- | --- |
| Are we gaining coverage? | Test counts | Coverage increases |
| Are we catching bugs? | Bug counts | Failure reason categories |
| Are we moving faster? | Time-to-automate | Time-to-feedback |

Following the Playbook

In short, the least painful way to transition from manual to automated testing takes five steps:

- Pick the right goals

- Pick the right resources

- Pick the right tests

- Pick the right increments

- Pick the right metrics

It’s not a perfect process, but it will put you and your team on the right path.

Frequently Asked Questions

Why is automated testing so hard? It's more than just recording steps. You're essentially building a software system on top of your product, which can lead to unexpected issues and require a deep understanding of software development principles. Managing test data effectively adds another layer of complexity.

Is it realistic for manual testers to learn automation? Absolutely, but it's a journey, not a sprint. Some testers thrive in this transition, while others prefer to focus on exploratory testing, which remains crucial. No-code tools can help bridge the gap, but they have limitations. Consider a balanced approach, supporting those eager to learn coding and automation while valuing the expertise of exploratory testers.

What's the biggest mistake companies make when starting automation? Focusing on the wrong goals. Many teams aim to automate a specific number of tests, which can lead to automating low-value tests just to hit targets. Instead, define goals based on business outcomes, like faster release cycles or reduced time spent on regression testing.

How do I choose which tests to automate first? Prioritize tests that offer the highest return on investment. Focus on frequently executed regression tests, tests covering critical functionalities, and tests prone to human error. Start with the "happy path" scenarios – the most common user flows – before tackling edge cases.

How can I measure the success of my test automation efforts? Track metrics that reflect real value, not just activity. Instead of counting tests, measure improvements in feature coverage. Monitor the reasons for test failures, aiming to minimize issues unrelated to actual product bugs. Finally, track how automation impacts the speed of your feedback loop, from code commit to test results and bug reports.