- Developers test expected behavior; QA tests real user behavior. Unit tests catch logic errors, but they don’t protect end-to-end experiences.

- Speed without QA creates false velocity. Teams ship faster at first, then lose weeks to firefighting and rollbacks.

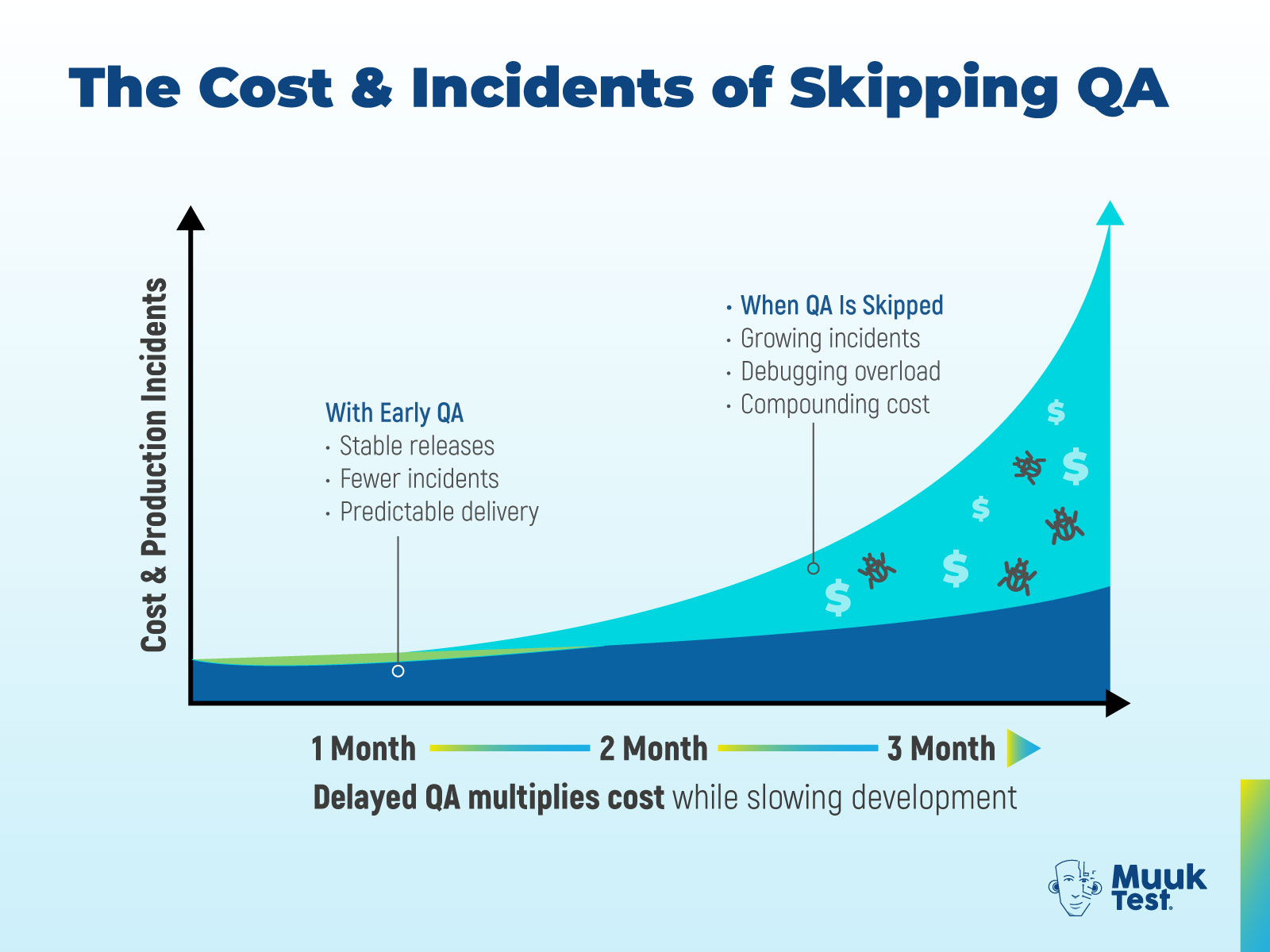

- Skipping QA doesn’t remove cost - it delays it. Quality debt compounds until debugging replaces building.

- Early success hides systemic risk. What works at 100 users often breaks at 10,000. Eventually, customers pay the price. And when they do, trust is the first thing lost.

This post is part of a 4-part series, From Speed to Trust: The QA Maturity Journey for Scaling Software Teams:

- The Dev-Only Startup Dream: Why Skipping QA Breaks Software Teams ← You're here

- When Customers Become Testers: The Real Cost of Missing QA - January 20th, 2026

- From Chaos to Control: How QA Stabilizes Software Teams - January 27th, 2026

- Quality as a Growth Engine: Beyond Bug Prevention - February 3rd, 2026

What Is the Dev-Only Startup Dream?

A new company is born, and so is its optimism. Every day, whiteboards overflow with ideas, Slack channels hum past midnight, and each code merge feels like another brick laid toward greatness. In this whirlwind, one decision quietly slips in under the disguise of good intentions: “Let’s skip QA for now — developers can test their own code. Testing isn’t rocket science anyway.”

On paper, it sounds reasonable. You’re running lean, chasing investors, and every new hire feels like a luxury you can’t afford. I’ve sat in that exact room many times. And almost every time, the same story unfolds: in the beginning, everything looks fast, sharp, and beautiful… until, inevitably, it cracks.

Can Developers Really Handle QA on Their Own?

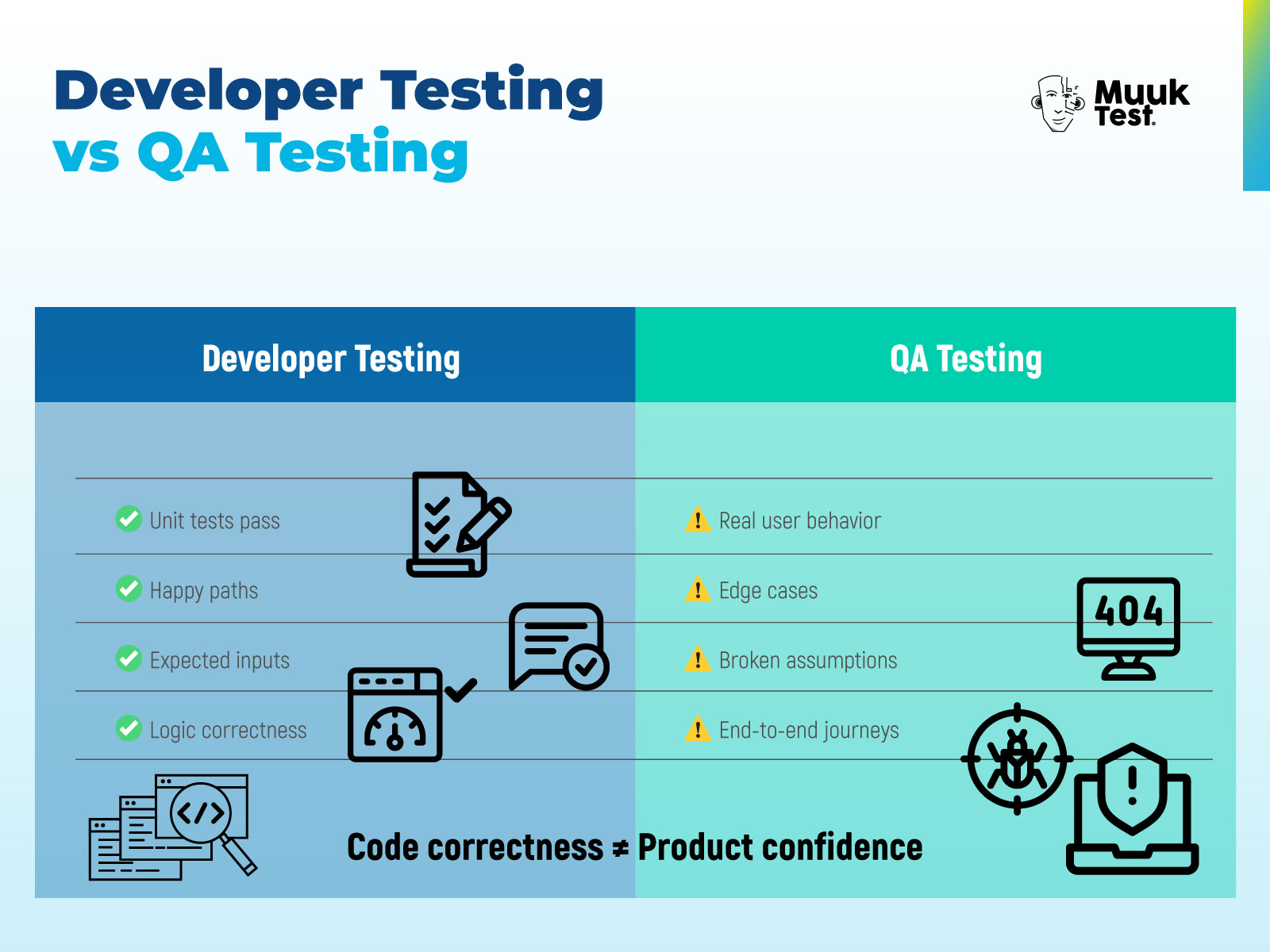

In theory, developers understand their code better than anyone else. They write unit tests, they review each other’s commits, and they genuinely believe those tests are enough. But as I learned painfully early in my career, code correctness is not the same as product confidence.

Developers test what they expect the system to do. QA tests what the user might actually do.

Take a fintech startup I advised in 2019. The engineers had 90% unit test coverage and continuous integration was humming perfectly. Yet, during their first public demo, a customer entered a space at the end of her name, and the payment API crashed.

Sounds funny? It wasn’t. This wasn’t a coding failure; it was a thinking failure. No one had tested real human behavior.

As Martin Fowler often reminds us, 100% test coverage measures lines, not learning. Unit tests guard the logic; QA guards the experience.
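The trailing-space incident above is easy to reproduce in a small test. This is a minimal sketch, not the startup's actual code: `normalize_payer_name` is a hypothetical helper assumed to sit in front of the payment API, and the sample inputs are invented to mimic what a real person might type.

```python
import unicodedata


def normalize_payer_name(raw: str) -> str:
    """Hypothetical helper: clean a user-typed name before it reaches the payment API."""
    # Normalize Unicode so visually identical names compare equal.
    text = unicodedata.normalize("NFKC", raw)
    # Collapse runs of whitespace and drop leading/trailing whitespace.
    cleaned = " ".join(text.split())
    if not cleaned:
        raise ValueError("name must contain at least one visible character")
    return cleaned


# Inputs a real user might produce, not just the happy path.
messy_inputs = ["Ada Lovelace ", "  Ada Lovelace", "Ada  Lovelace", "Ada\tLovelace\n"]
normalized = [normalize_payer_name(value) for value in messy_inputs]
```

The point is less the helper itself than the input list: someone has to think of the trailing space before a customer types it.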

What Happens When Teams Move Fast Without QA Structure?

The early months feel like flying. Features ship daily, customer feedback loops are fast, and investors beam at your velocity charts. But speed without structure is like sprinting through fog — you can’t see the cliff edge.

Developers start shipping directly from local branches, often late at night, followed by the Slack message: “Pushed hotfix, please verify.”

There’s no dedicated test environment. No regression list. No one pauses to ask, “What if this breaks checkout?”

Very quickly, “done” stops meaning done.

I recall an IoT startup that built connected air-quality sensors. Their firmware developers were brilliant: former research-lab engineers who believed QA could wait until after launch. Within six months, device recalls wiped out nearly 12% of their Series A funding. The cause was a minor calibration flaw that multiplied across thousands of devices because no one had tested the sensors under real-world humidity variations.

What began as a cost-saving decision became a full-blown credibility crisis.

What Is the Hidden Cost of Delaying QA?

Skipping QA isn’t an avoidance of cost — it’s a deferral with interest.

As Accelerate highlights, elite engineering teams consistently achieve both speed and stability because they build quality early. Teams that postpone QA accumulate quality debt, a cousin of technical debt that doesn’t appear on financial statements but quietly drains productivity.

- Every untested scenario adds a layer of fragility.

- Every quick fix becomes a loan against future stability.

- And like any loan, the interest compounds quietly — until the system eventually collapses under its own weight.

In one of my early transformation engagements, we illustrated this using a simple metaphor: the quality interest rate. Every month without meaningful regression testing increased production incidents by nearly 15%. By the third month, developers were spending 40% of their time debugging instead of building. Momentum died. Morale dipped. Velocity became an illusion.
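To see how quickly that kind of rate adds up, here is a rough sketch. It assumes the 15% figure compounds month over month, which is one reading of the "interest rate" metaphor rather than something the engagement data confirms, and the baseline of 20 incidents per month is purely hypothetical.

```python
def projected_incidents(baseline: float, monthly_growth: float, months: int) -> float:
    """Incidents per month after `months` of compounding growth (assumed model)."""
    return baseline * (1 + monthly_growth) ** months


# Hypothetical baseline of 20 incidents per month; 0.15 is the ~15% figure above.
for month in range(0, 7):
    print(month, round(projected_incidents(20, 0.15, month), 1))
```

Under that assumption, incident volume is roughly 1.5 times the baseline after three months and about 2.3 times after six.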

The CFO didn’t need a QA lecture after that. The spreadsheet told the story.

Why Early Success Can Hide Serious Quality Risks

Ironically, for a while, everything looks perfect. The MVP ships. Users sign up. Investors applaud your “lightning-fast delivery.” Everyone starts believing you’ve outsmarted the rulebook.

But early success hides defects the way a calm sea hides rocks. As traction grows, integration boundaries stretch. Data becomes messy. And “works on my machine” turns from a joke into a warning.

Then, one ordinary morning, something breaks in production — often the simplest thing.

- A date format mismatch.

- A misconfigured feature flag.

- A missing null check.

Suddenly, speed turns into firefighting. You rush a patch. The patch breaks something else. You roll back. The rollback introduces another issue.

Welcome to the endless loop of post-release chaos.

As Gene Kim wrote in The Phoenix Project: “When you’re always firefighting, you forget the last time you built something new.” That line lives rent-free in my head because I’ve seen it unfold far too many times.

What Skipping QA Reveals About Engineering Culture

The absence of QA does not just expose bugs; it exposes beliefs. It reveals how a company values craftsmanship. When leaders say, “We'll add QA later,” what the team hears is that quality is optional. That message seeps into every conversation. Developers rush merges, and product managers chase timelines over test coverage. Retrospectives are skipped because there is no time. Quality stops being a shared responsibility and becomes a whisper no one has time for.

In contrast, the best teams I have seen, even those with just five people, build micro-rituals of quality early. They hold mini-demos, create shared bug lists, and celebrate catching issues before customers do. They understand that QA is not a department, but a discipline.

Why Unit Tests Alone Are Not Enough for Modern Software

Unit tests answer: does this method work?

QA asks: does the user's journey succeed?

When you integrate multiple APIs, devices, and browsers, the surface area for risk explodes. No amount of isolated tests can replicate real user variance. A unit test might confirm, for example, that a discount applies correctly. But it takes QA to catch that combining a coupon and wallet credit triggers a database rollback in certain tax regions.

That is why frameworks like continuous testing for DevOps professionals emphasize layered validation: unit, integration, system, and exploratory testing. Skipping layers is like building a bridge tested only for wind, not for trucks.
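The gap between the unit layer and the journey layer can be shown with a deliberately simplified sketch. The function names and amounts are hypothetical, and the failure here (a negative total) stands in for the more complex coupon-plus-wallet bug described above.

```python
from decimal import Decimal


def apply_coupon(total: Decimal, percent_off: Decimal) -> Decimal:
    """Hypothetical pricing step: apply a percentage discount."""
    return total * (Decimal("1") - percent_off / Decimal("100"))


def apply_wallet_credit(total: Decimal, credit: Decimal) -> Decimal:
    """Hypothetical pricing step: subtract stored credit (note: no floor at zero)."""
    return total - credit


def checkout_total(cart_total: Decimal, percent_off: Decimal, credit: Decimal) -> Decimal:
    """The user's journey: coupon first, then wallet credit."""
    return apply_wallet_credit(apply_coupon(cart_total, percent_off), credit)


# Unit-level checks: each step is correct in isolation.
assert apply_coupon(Decimal("100"), Decimal("20")) == Decimal("80")
assert apply_wallet_credit(Decimal("100"), Decimal("30")) == Decimal("70")

# Journey-level check: a 50% coupon plus more credit than the remaining balance.
journey_total = checkout_total(Decimal("40"), Decimal("50"), Decimal("30"))
journey_is_valid = journey_total >= 0  # False: the customer would be "charged" -10
```

Both unit assertions pass, yet the combined journey produces an invalid total. Only a test that exercises the steps together notices.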

The Turning Point - When Skipping QA Finally Breaks Down

Eventually, something happens that forces reflection: an outage, a client escalation, or a product recall. That's when leadership says, “We need QA yesterday.” But it's not just about hiring testers; it's about unlearning old habits.

- Developers must stop viewing QA as a post-production policy.

- Product managers must stop equating QA with delays.

- Executives must start investing in testing as early as possible.

As Jez Humble once said, “if it hurts, do it more often.” Testing should not be a phase to survive, but a rhythm to sustain. That's the moment when companies realize you can't bolt quality on; you have to bake it in.

Case Study: The FinTech “Speed Trap”

Let me share one anonymous but very real story.

A fintech startup (let’s call it PayZen) launched a peer-to-peer payment app in record time — six months from concept to live users. They had no QA team; developers wrote extensive unit tests and used mocks for APIs.

At 100 users, it worked fine.

At 10,000 users, concurrency issues caused transaction duplication.

At 100,000, those duplicates hit actual bank accounts.

It took six weeks of crisis response, three external audits, and one regulatory warning to restore confidence. The CTO later told me, “We thought QA would slow us down; not having QA nearly killed us.”

Their recovery phase (which we’ll explore in Part 3) became a case study in our internal QA leadership workshops: the most expensive lesson in “false velocity.”
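Duplicate transactions under load are the kind of failure that mocks and single-threaded unit tests rarely surface. The sketch below is not PayZen's code. It assumes a hypothetical in-memory `Ledger` guarded by an idempotency key, and shows the sort of concurrency test that hammers one payment from several threads and checks it is recorded once. A real system would enforce this in the database rather than in process memory.

```python
import threading


class Ledger:
    """Hypothetical in-memory ledger used only to illustrate the test."""

    def __init__(self):
        self._lock = threading.Lock()
        self._seen_keys = set()
        self.transfers = []

    def transfer(self, idempotency_key, amount_cents):
        """Record the transfer once per key; return False for a duplicate request."""
        # The check and the write share one lock, so two threads
        # cannot both decide the key is new.
        with self._lock:
            if idempotency_key in self._seen_keys:
                return False
            self._seen_keys.add(idempotency_key)
            self.transfers.append((idempotency_key, amount_cents))
            return True


def hammer(ledger, key, attempts, workers):
    """Simulate many clients retrying the same payment at the same time."""

    def worker():
        for _ in range(attempts):
            ledger.transfer(key, 2500)

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()


ledger = Ledger()
hammer(ledger, key="payment-123", attempts=50, workers=8)
recorded = len(ledger.transfers)  # one transfer despite 400 requests
```

Removing the lock from `transfer` turns this into a race that may or may not fail on any given run. That is why concurrency tests are usually repeated under load rather than run once.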

Conclusion: Where the Dev-Only Dream Breaks

The dev-only startup dream is built on optimism. No team wakes up intending to ship broken software. The decision to skip QA usually comes from good intentions: move fast, conserve cash, prove momentum.

But reality is unforgiving.

As systems grow, complexity multiplies. Integration boundaries stretch. Human behavior becomes unpredictable. And without QA, teams don’t just miss bugs—they miss understanding. What once felt like speed slowly turns into fragility. Releases become stressful. Hotfixes replace progress. Developers stop trusting their own code.

Eventually, something forces the issue. An outage. A public incident. A customer escalation. That’s the moment when the illusion finally breaks and leadership realizes: quality can’t be bolted on after the fact - it has to be built in from the start.

And when QA is missing internally, the consequences don’t stay internal.

Customers become the test team.

Bugs escape into production. Jira tickets turn into public reviews. Screenshots replace bug reports. Trust erodes faster than features can ship.

👉 Don’t miss Part 2: When Customers Become Testers — The Real Cost of Missing QA, where we follow what happens when users pay the price for missing quality.

Frequently Asked Questions

Is it okay for startups to skip QA in the early stages?

Skipping QA may feel reasonable early on, but it creates hidden risk. While developers can validate logic with unit tests, they can’t reliably test real user behavior, integrations, or edge cases. As the product scales, these gaps turn into production failures, rework, and lost trust.

Can developers replace QA by testing their own code?

No. Developers and QA play different roles. Developers test whether code works as intended. QA tests whether the product works in real-world conditions. Without QA, teams often miss end-to-end journeys, unexpected user behavior, and system interactions that unit tests don’t cover.

What are the risks of dev-only testing?

Dev-only testing often leads to false confidence. Teams ship faster at first, but without QA structure, defects escape into production, regression issues multiply, and releases become stressful. Over time, developers spend more time fixing bugs than building features.

Why do unit tests alone not prevent production bugs?

Unit tests validate isolated pieces of code, not full user journeys. Production bugs often come from integrations, configuration changes, data inconsistencies, or real user behavior—areas that unit tests are not designed to catch. QA focuses on these higher-risk scenarios.

What is the real cost of skipping QA?

Skipping QA doesn’t eliminate cost; it delays it. Defects found in production take significantly longer to fix and often involve customer support, refunds, downtime, and reputation damage. This “quality debt” compounds over time and eventually slows development velocity.

When do teams usually realize skipping QA was a mistake?

Most teams realize it after a forcing event: a major outage, a failed release, customer complaints, or a public incident. By then, trust has already been damaged, and rebuilding quality is harder than starting early.

How does skipping QA affect engineering culture?

When QA is absent, quality becomes optional. Teams rush merges, skip retrospectives, and normalize firefighting. Over time, developers lose confidence in their releases, morale drops, and delivery becomes reactive instead of predictable.