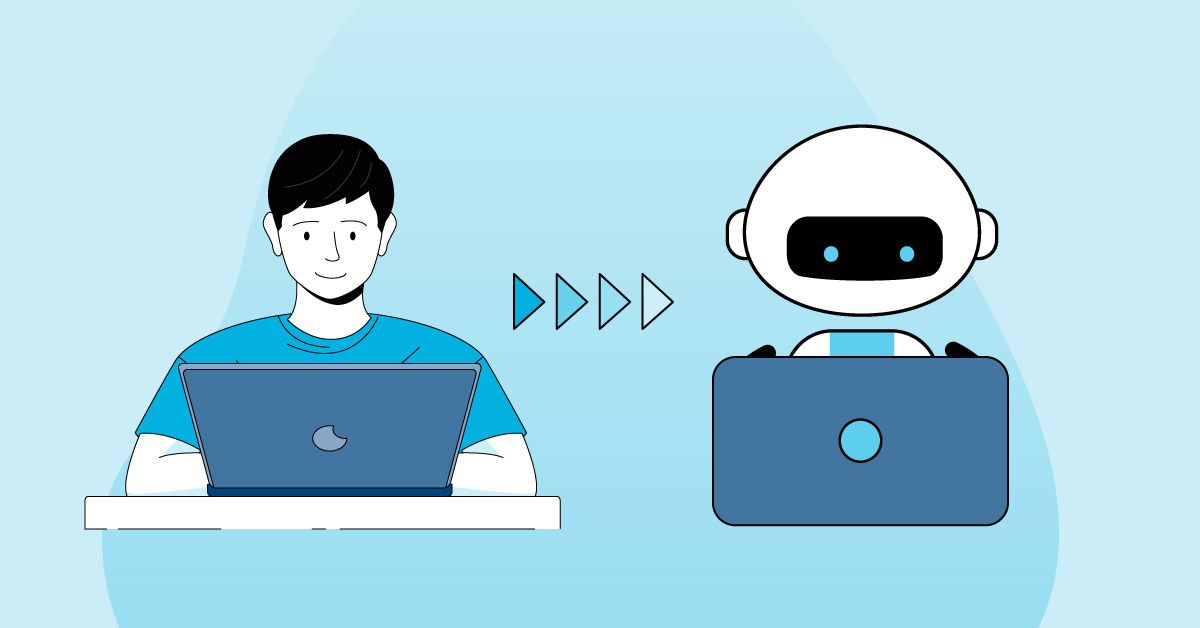

- AI testing tools excel at the easy 80% but consistently miss the high-risk 20%. They automate predictable flows well but struggle with dynamic data, branching logic, async behavior, integrations, and real-world edge cases, which is where most critical bugs actually hide.
- The hard 20% of testing requires human judgment, not just automation. AI can generate steps, but it can’t understand intent, risk, business rules, user roles, or the messy variability of real usage. High-impact test cases still need human-designed coverage.
- Forcing AI tools into complex scenarios triggers a flakiness spiral. Teams fall into endless re-recordings, retries, quarantines, and brittle tests that break constantly, which erodes engineers' trust and lets real regressions slip through.
- Real QA maturity comes from strategy, not tool volume. AI can accelerate throughput, but only a hybrid approach that combines AI with human insight delivers true reliability. Without that strategy, automation becomes noise instead of protection.

This post is part 2 of a 4-part series on The Real ROI of AI Testing Tools - From Illusion to Impact:

1. Why DIY AI Testing Tools Only Cover the Easy 80%
2. Why DIY AI Testing Tools on their own Struggle with the Hard 20% ← You're here
3. How CTOs Can Maximize ROI from AI Testing Tools - Dec 9, 2025
4. MuukTest’s Hybrid QA Model: AI Agents + Expert Oversight - Dec 16, 2025
