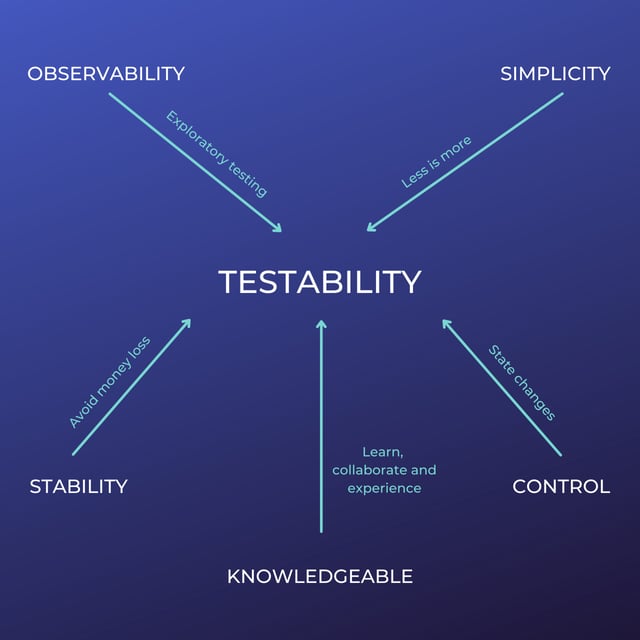

Ever feel like you're fumbling in the dark when testing software? The culprit might be low testability. It's a common struggle, but thankfully, one we can fix. This post breaks down five key characteristics of highly testable software, offering practical advice and real-world examples to make your testing process way less painful. We'll cover how simplicity, observability, control, knowledge, and stability dramatically improve testability in software engineering, leading to faster releases and higher quality software.

One option is to ask developers and business analysts what the software is expected to do. But what if testing was not considered when the requirements were created or the code was implemented? In those cases, testing a new application or service can be challenging because there are no clear expectations.

It may be hard to drive testability from a testing perspective alone, so we need to work together. The development team and the business team should collaborate to make our requirements and artifacts testable. In our experience, the following five characteristics improve testability in software engineering.

Five Characteristics to Build Testability in Software

Key Takeaways

- Prioritize testability for easier debugging and higher quality software: Simpler designs, improved observability, and greater control make testing more efficient and effective. Clear requirements and a skilled testing team are also essential for success.

- Use practical techniques to improve testability: Explore methods like Test-Driven Development (TDD), Built-in Test (BIT), and various testability metrics to enhance your testing process. Formal testability analysis throughout the development lifecycle is also crucial.

- Balance testability with other important factors: While testability is important, don't neglect user experience and security. A balanced approach ensures high-quality software that is both user-friendly and easy to test.

What is Testability?

Testability simply refers to how easily a software component or system can be tested. More formally, as defined by Wikipedia, "Testability is the degree to which a software artifact supports testing in a given test context." This context includes the software itself, the testing methods, available resources, and the overall testing environment. High testability contributes significantly to other desirable software qualities, like maintainability and reliability. Think of it like this: a well-organized closet (high testability) makes it easy to find what you need (efficient testing), whereas a cluttered one (low testability) leads to frustration and wasted time.

Testability in a Broader Context

The concept of testability extends beyond software. In research, it's closely related to falsifiability and reproducibility—the ability to prove a theory wrong or replicate results consistently. Testability plays a crucial role even in fields like psychology, ensuring that measurements and tests accurately determine the validity of a premise. For example, if a psychological study's methods are clearly defined and repeatable by other researchers, its findings are considered more testable and, therefore, more reliable. This principle applies equally to software development. Testable code allows for easier debugging and updates, ultimately creating a more robust end-product. Services like those offered by MuukTest can help ensure your software achieves high testability from the start.

Adding Simplicity

Simplicity means creating the most straightforward possible solution to the problem at hand. Reducing the complexity of a feature so that it delivers only the required value helps testers minimize the scope of functionality that needs to be covered.

Never be afraid to ask about removing complexity from business requirements; less is more in this situation. As part of the definition of “done”, we need to have expected results in every story. If that is not feasible, we must at least have a clear idea of customer expectations.

Improve Observability

Observing the software and understanding different patterns gives us a tremendous advantage in catching gaps or errors. Exploratory Testing can improve observability. Sometimes, we need to learn from our applications; observing is core to exploring multiple behaviors and paths while testing.

Improving log files and tracking allows us to monitor system events and recreate problems, with the additional benefit of making the system easier to support over time.
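To make the logging point concrete, here is a minimal sketch using Python's standard `logging` module. The `checkout` logger name, the `place_order` function, and the `order_id` field are hypothetical names chosen for illustration. The idea is that one JSON object per event gives tests and support staff something they can parse and assert on.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "event": record.getMessage(),
            # order_id is a hypothetical field supplied through `extra=`.
            "order_id": getattr(record, "order_id", None),
        }
        return json.dumps(payload)


def build_logger(stream) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger("checkout")  # hypothetical component name
    logger.handlers.clear()
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


def place_order(logger: logging.Logger, order_id: str, amount: float) -> bool:
    """Hypothetical business function that logs every state change."""
    logger.info("order_received", extra={"order_id": order_id})
    if amount <= 0:
        logger.error("order_rejected", extra={"order_id": order_id})
        return False
    logger.info("order_accepted", extra={"order_id": order_id})
    return True


# A test can capture the log and check the exact sequence of events.
buffer = io.StringIO()
place_order(build_logger(buffer), "A-1", 0)
events = [json.loads(line) for line in buffer.getvalue().splitlines()]
```

Because the events are structured, a failed run can be reconstructed from the log alone, without stepping through the code.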

Control

Control is critical for testability, particularly when test automation is required. Being able to drive the system's state changes cleanly and deterministically is valuable to any testing effort and is a basic element of a test automation strategy.

I suggest focusing on what we can expect (expected results). A simple approach is to take customer-centric scenarios and identify the specific outcome each one should produce. With known outcomes, we can automate the tests. Trying to automate something unexpected or unpredictable, on the other hand, is chaotic.
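One common way to get deterministic control is to pass time (or any other source of variability) into the code instead of letting the code read it from the environment. The sketch below is illustrative only: `FixedClock`, `Session`, and the 30-minute timeout are assumptions, not part of any particular product.

```python
from datetime import datetime, timedelta, timezone


class FixedClock:
    """Test double for time: it only moves when the test says so."""

    def __init__(self, now: datetime):
        self._now = now

    def now(self) -> datetime:
        return self._now

    def advance(self, seconds: int) -> None:
        self._now += timedelta(seconds=seconds)


class Session:
    """Hypothetical session that expires after a period of inactivity."""

    TIMEOUT = timedelta(minutes=30)  # illustrative value

    def __init__(self, clock):
        self._clock = clock
        self._last_seen = clock.now()

    def is_expired(self) -> bool:
        return self._clock.now() - self._last_seen >= self.TIMEOUT


# The test controls the state change instead of sleeping for 30 minutes.
clock = FixedClock(datetime(2024, 1, 1, tzinfo=timezone.utc))
session = Session(clock)
expired_at_start = session.is_expired()
clock.advance(30 * 60)
expired_after_timeout = session.is_expired()
```

In production the same `Session` would receive a clock backed by the real system time, so the expected result of each scenario stays predictable in tests without changing the logic.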

“I’m going to show these people what you don’t want them to see. I’m going to show them a world without you. A world without rules and controls, without borders or boundaries. A world where anything is possible.”

NEO, The Matrix Franchise

Be Knowledgeable

As testers, we must become subject matter experts on the application, take advantage of what we learn along the way (including the fresh perspective of a new user), and collaborate. It is crucial to share that knowledge with the rest of the team and to keep learning from others. This is a never-ending journey.

Involving testers brings a wealth of testing knowledge and context to any software discussion. Team members must work together to understand the essential quality attributes, critical paths, core components, and associated risks, so that the design allows the team to mitigate those risks in the most effective way.

Testing Stability

It is tough to test a system whose functionality keeps changing or that suffers from a high level of operational faults. Nothing hinders testing like an unstable system. We cannot create automated test scripts or performance test scripts for applications that are continuously changing or failing.

Stability can be tricky. Unstable systems can destroy the application’s reputation and result in financial loss. Therefore, it is essential to get a stable version of the application to create our tests before starting any test automation.

Factors Affecting Testability

Several factors influence a system's testability. While simplicity, observability, control, and stability are essential, other elements play a crucial role. Understanding these factors helps teams build more testable software from the ground up.

Requirements, Design, and the Tester's Skill

Clear, concise, and measurable requirements are the bedrock of effective testing. A well-structured software design, featuring loose coupling and comprehensive documentation, simplifies the testing process. Think of it like building a house: a clear blueprint makes construction much smoother. The tester's skill and experience also play a significant role. A seasoned tester can identify potential issues more readily and design more effective test cases. Their motivation contributes to thoroughness and a proactive approach to finding bugs.

Isolatability, Understandability, and Automatability

Isolating components for testing, writing understandable code, and designing for test automation are key factors. The easier it is to isolate a component, the simpler it is to pinpoint the source of a bug. Understandable code makes it easier for anyone on the team to contribute to testing efforts. And designing for test automation streamlines repetitive tests, freeing up testers to focus on more complex scenarios.

Complexity, Team Dynamics, and System Size

Project complexity, team size, and the overall system size can introduce challenges to testing. Larger teams, complex systems, and numerous stakeholders can make thorough testing more difficult. Effective communication and collaboration become even more critical in these scenarios to ensure everyone is on the same page and potential issues are addressed promptly.

Testing Time and Planning

Sufficient testing time and a well-defined test plan are crucial for success. A comprehensive plan ensures efficient use of testing resources and improves overall testability. Adequate time allows for thorough testing, exploration of edge cases, and proper analysis of results. Rushing the testing process can lead to missed bugs and ultimately compromise the quality of the software.

Techniques and Practices for Improved Testability

Test-Driven Development (TDD)

Test-driven development (TDD) is a powerful technique where tests are written before the code. This approach ensures testability is baked in from the very beginning. TDD not only improves testability but also leads to more modular and maintainable code, making it easier to adapt to future changes.
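As a small illustration of the test-first order, the sketch below uses Python's built-in `unittest`. The `slugify` function and its rules are hypothetical. The tests appear first because in TDD they are written first and fail until the implementation underneath exists.

```python
import unittest


# Step 1 (red): written first, these tests describe the expected behavior.
class SlugifyTest(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_drops_punctuation(self):
        self.assertEqual(slugify("Testability, explained!"), "testability-explained")

    def test_empty_title_gives_empty_slug(self):
        self.assertEqual(slugify(""), "")


# Step 2 (green): the simplest implementation that makes the tests pass.
def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated slug."""
    # Replace every non-alphanumeric character with a space, then split.
    cleaned = "".join(c if c.isalnum() else " " for c in title.lower())
    return "-".join(cleaned.split())


# Step 3 (refactor): with the tests green, the code can be reshaped safely.
```

Saved as a file, this can be run with `python -m unittest <file>`. The function ends up with a small, explicit contract because the tests had to be expressible before any code existed.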

Built-in Test (BIT)

Especially relevant for hardware and embedded systems, built-in test (BIT), including types like P-BIT, C-BIT, and O-BIT, allows for automated self-testing. This built-in capability can significantly reduce the time and effort required for external testing, allowing for quicker identification and resolution of hardware-related issues.
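Built-in test is mainly a hardware and embedded concept, but the idea can be sketched in software as a registry of self-checks that the system runs on itself, for example at startup. The following is a loose analogy under that assumption. The `SelfTest` class and the check names are hypothetical and do not model any specific BIT standard.

```python
from typing import Callable, Dict, List


class SelfTest:
    """Registry of named self-checks that a system can run on itself."""

    def __init__(self) -> None:
        self._checks: Dict[str, Callable[[], bool]] = {}

    def register(self, name: str, check: Callable[[], bool]) -> None:
        self._checks[name] = check

    def run(self) -> Dict[str, bool]:
        """Run every check; a check that raises counts as a failure."""
        results: Dict[str, bool] = {}
        for name, check in self._checks.items():
            try:
                results[name] = bool(check())
            except Exception:
                results[name] = False
        return results

    def failed(self) -> List[str]:
        """Names of the checks that did not pass, in registration order."""
        return [name for name, ok in self.run().items() if not ok]


# Hypothetical checks standing in for real hardware probes.
bit = SelfTest()
bit.register("memory", lambda: True)
bit.register("sensor", lambda: 1 / 0 > 0)  # simulates a faulty probe
bit.register("config", lambda: False)
startup_failures = bit.failed()
```

Reporting failures by name is what shortens diagnosis: the system itself says which part is unhealthy before any external test equipment is attached.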

Metrics for Measuring Testability

Metrics such as code coverage, cyclomatic complexity, and maintainability index provide quantifiable measures of testability. These metrics can help identify areas for improvement and track progress over time. Tools like SonarQube can assist in gathering and analyzing these metrics, providing valuable insights into the codebase's testability.
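To show what a metric like cyclomatic complexity measures, here is a rough approximation built on Python's standard `ast` module. It counts decision points and adds one. Real tools such as SonarQube apply their own, more detailed rules, so treat this as a sketch of the idea rather than a replacement for them.

```python
import ast

# Node types treated as one decision point each (a simplifying assumption).
DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra paths, one per operator.
            complexity += len(node.values) - 1
    return complexity


SIMPLE = """
def increment(x):
    return x + 1
"""

BRANCHY = """
def first_odd(x):
    if x > 0 and x < 10:
        return 1
    for i in range(x):
        if i % 2:
            return i
    return 0
"""

simple_score = cyclomatic_complexity(SIMPLE)    # 1
branchy_score = cyclomatic_complexity(BRANCHY)  # 5
```

A higher score means more independent paths through the code, and therefore more test cases needed to exercise them all.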

Practical Examples and Solutions

Specific techniques like adding unique identifiers for UI testing, creating APIs for test data setup, and improving logging can greatly enhance testability. These practical solutions make it easier to interact with the system under test, prepare test data, and diagnose issues. For example, unique identifiers simplify UI automation, while robust logging provides a clear audit trail for debugging.
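The unique-identifier idea can be sketched as follows. The example assumes the team marks elements with a `data-testid` attribute, a common convention but not the only one. The markup, the identifier names, and the `StableIdIndex` helper are all hypothetical. The point is that the test locates elements by a stable identifier instead of by generated CSS classes or page position.

```python
from html.parser import HTMLParser
from typing import Dict, Tuple


class StableIdIndex(HTMLParser):
    """Index every element that carries a data-testid attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.by_test_id: Dict[str, Tuple[str, dict]] = {}

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        test_id = attributes.get("data-testid")
        if test_id is not None:
            self.by_test_id[test_id] = (tag, attributes)


def index_test_ids(html: str) -> Dict[str, Tuple[str, dict]]:
    """Return a mapping of test id to (tag name, attributes)."""
    parser = StableIdIndex()
    parser.feed(html)
    return parser.by_test_id


# Hypothetical page: class names are generated and may change on each build,
# while the data-testid values are agreed between developers and testers.
PAGE = (
    '<form>'
    '<input data-testid="login-email" class="x1 y2">'
    '<button data-testid="login-submit" class="btn-3f9a">Sign in</button>'
    '</form>'
)

elements = index_test_ids(PAGE)
submit_tag, submit_attrs = elements["login-submit"]
```

A UI automation tool would use the same identifiers as selectors, so a restyling that changes every class name leaves the tests untouched.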

Unit Testing and Modular Design

Employing unit testing frameworks, writing small, independent modules, and using dependency injection and mock objects are valuable practices. These techniques promote isolation and control, making unit testing more effective and contributing to overall testability. Modular design simplifies testing by breaking down complex systems into smaller, more manageable units.
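A minimal sketch of dependency injection with mock objects, using `unittest.mock` from the Python standard library. `SignupService`, its collaborators, and their method names are hypothetical. Because the collaborators are passed in, the test can replace the database and the mail server with mocks and check the behavior in isolation.

```python
from unittest.mock import Mock


class SignupService:
    """Hypothetical service whose dependencies are injected, not created."""

    def __init__(self, user_repo, mailer):
        self._user_repo = user_repo
        self._mailer = mailer

    def register(self, email: str) -> bool:
        if self._user_repo.exists(email):
            return False
        self._user_repo.save(email)
        self._mailer.send_welcome(email)
        return True


def test_new_user_is_saved_and_welcomed():
    repo = Mock()
    repo.exists.return_value = False
    mailer = Mock()

    assert SignupService(repo, mailer).register("a@example.com") is True

    repo.save.assert_called_once_with("a@example.com")
    mailer.send_welcome.assert_called_once_with("a@example.com")


def test_existing_user_is_not_registered_twice():
    repo = Mock()
    repo.exists.return_value = True
    mailer = Mock()

    assert SignupService(repo, mailer).register("a@example.com") is False

    repo.save.assert_not_called()
    mailer.send_welcome.assert_not_called()
```

No database or mail server is needed to run these tests, which is what keeps unit tests fast and keeps failures pointing at one module.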

Types of Software Testing

Different types of software testing, including static analysis, dynamic analysis, and functional analysis, contribute to a comprehensive testability assessment. Each type provides unique insights into the software's behavior and potential vulnerabilities, ensuring a well-rounded testing approach.

Testability Analysis as a Process

Testability analysis should be a formal process integrated into the product development lifecycle. Early analysis, collaboration between design, test, and maintenance teams, and techniques like FMECA (Failure Mode, Effects, and Criticality Analysis) are essential for success. This process helps meet product requirements for fault detection and isolation, which are often expressed as a percentage of faults detected and as isolation to a given number of shop-replaceable units (SRUs). Early involvement of testing teams ensures testability considerations are addressed from the outset.
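To illustrate how a fault detection or isolation requirement can be turned into a number, the sketch below computes both percentages from a small failure-mode table. Everything in it is an assumption made for illustration: the failure modes, the failure rates, and the choice to weight each mode by its failure rate. A real analysis would take these from the project's own FMECA.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FailureMode:
    name: str
    failure_rate: float   # illustrative units, e.g. failures per million hours
    detected: bool        # does any test detect this failure mode?
    isolated_to: int      # replaceable units the fault is narrowed down to


def detection_coverage(modes: List[FailureMode]) -> float:
    """Percentage of the total failure rate that the tests detect."""
    total = sum(m.failure_rate for m in modes)
    detected = sum(m.failure_rate for m in modes if m.detected)
    return 100.0 * detected / total


def isolation_coverage(modes: List[FailureMode], max_units: int) -> float:
    """Percentage of detected faults isolated to at most `max_units` units."""
    detected = [m for m in modes if m.detected]
    total = sum(m.failure_rate for m in detected)
    isolated = sum(m.failure_rate for m in detected if m.isolated_to <= max_units)
    return 100.0 * isolated / total


# Hypothetical failure-mode table.
MODES = [
    FailureMode("power supply short", 50.0, True, 1),
    FailureMode("bus timeout", 30.0, True, 2),
    FailureMode("sensor drift", 15.0, True, 1),
    FailureMode("connector corrosion", 5.0, False, 0),
]

detection = detection_coverage(MODES)         # 95.0
isolation_one = isolation_coverage(MODES, 1)  # about 68.4
isolation_two = isolation_coverage(MODES, 2)  # 100.0
```

Numbers like these make a testability requirement checkable: the team can see which undetected or poorly isolated failure modes keep the design below its target.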

Risks of Over-Focusing on Testability

While crucial, overemphasizing testability can have drawbacks. It's important to strike a balance with other critical aspects like user experience and security. A laser focus on testability might lead to overly complex designs that hinder usability. Misinterpreting testability metrics can also lead to a false sense of security regarding software quality.

Benefits of Testability

Improved testability offers numerous benefits, including faster product releases, reduced bug fixing costs, increased customer satisfaction, better teamwork, a clearer understanding of requirements, and more flexible software. Investing in testability ultimately leads to higher quality, more maintainable software that can adapt to evolving needs. Consider partnering with a company like MuukTest to achieve comprehensive test coverage within 90 days, significantly enhancing your test efficiency and coverage.

Key Figures in Testability Research

Karl Popper's concept of falsifiability, a cornerstone of scientific theory, has significantly influenced the understanding of testability in software development. His work highlights the importance of designing tests that can potentially disprove a hypothesis, rather than just confirm it, leading to more robust and reliable software.

Final Thoughts

When we talk about high-quality applications, testability must be part of the conversation. A system that is so complicated it cannot support atomic checks will be hard to test and hard to integrate. In addition, testability can improve unit testing, API testing, integration testing, performance testing, and more.

Testability can increase confidence in our test results by giving us better visibility into the states and mechanisms those results are based on. However, keep in mind that testability is not a magic wand. It is vital to communicate constantly with the team and guide them on testability during requirements, design, coding, and implementation.

Happy Bug Hunting.

Frequently Asked Questions

What should be the mindset of a software tester?

A tester with the right mindset constantly recognizes and evaluates assumptions to determine whether they are valid and valuable or are misleading the testing activities.

How do you ensure that software meets quality standards?

A structured QA strategy combined with regular testing is one way to ensure software quality. Multiple testing cycles combined with automation can catch bugs that other testing strategies may have missed.

How can you improve as a software tester?

Learn about different testing techniques, familiarize yourself with different software testing tools, and practice your skills continuously.